Since the release of version 2.6.13 in August 2005, the Linux kernel has supported adjusting the frequency of the timer interrupt via the CONFIG_HZ compile-time option. This setting can have a considerable impact on overall system latency and throughput in certain scenarios. This article explores its impact on KVM guests.


Timer Interrupts on x86

The Linux kernel programs the hardware to deliver a timer interrupt every few milliseconds. With CONFIG_HZ=1000, for example, a timer interrupt arrives every millisecond; at 250Hz, one arrives every 4 milliseconds. On each timer interrupt, the kernel suspends whatever the CPU was doing to perform scheduling and timekeeping tasks.
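The tick interval is simply the reciprocal of CONFIG_HZ. The short Python sketch below computes it for each frequency the kernel source offers (10, 4, 3.33, and 1 ms respectively); `tick_period_ms` is an illustrative helper name, not a kernel interface.

```python
# CONFIG_HZ choices offered by the kernel source.
HZ_CHOICES = (100, 250, 300, 1000)


def tick_period_ms(hz: int) -> float:
    """Interval between periodic timer interrupts for a given CONFIG_HZ, in ms."""
    if hz <= 0:
        raise ValueError("HZ must be positive")
    return 1000.0 / hz


if __name__ == "__main__":
    for hz in HZ_CHOICES:
        print(f"CONFIG_HZ={hz}: one tick every {tick_period_ms(hz):.2f} ms")
```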

Because of this, the value should be tuned to the system’s workload. A higher value can consume slightly more power and sacrifice some throughput in exchange for lower overall system latency. A lower value lets the CPU idle longer and increases timer latency in exchange for better throughput and efficiency. Note that the timer interrupt fires on every CPU core, so the throughput cost of a higher timer frequency grows with core count, especially on NUMA systems.
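To get a feel for the per-core effect, the sketch below multiplies HZ by the number of logical CPUs. It assumes every CPU takes every tick, which overstates the count on kernels with tickless idle (CONFIG_NO_HZ_IDLE), where idle CPUs stop their periodic tick; treat the result as an upper bound. The helper name is hypothetical.

```python
def machine_ticks_per_second(hz: int, logical_cpus: int) -> int:
    """Upper bound on periodic timer interrupts per second across all CPUs.

    Assumes (simplification) that every logical CPU takes every tick;
    with tickless idle, idle CPUs skip ticks and the real count is lower.
    """
    if hz <= 0 or logical_cpus <= 0:
        raise ValueError("hz and logical_cpus must be positive")
    return hz * logical_cpus


if __name__ == "__main__":
    cpus = 16  # e.g. an 8-core/16-thread processor
    for hz in (100, 250, 300, 1000):
        print(f"CONFIG_HZ={hz}: up to {machine_ticks_per_second(hz, cpus)} ticks/s on {cpus} CPUs")
```

On x86, the LOC row of /proc/interrupts counts local timer interrupts per CPU; sampling it twice, a second apart, gives a measured rate to compare against this bound. LOC also includes high-resolution timer events, so it is only a rough proxy for the tick alone.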

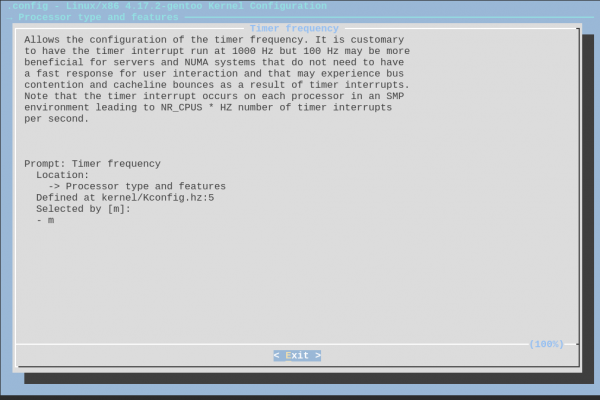

Changing the Interrupt Frequency

The CONFIG_HZ option resides under Processor type and features -> Timer frequency in make menuconfig. Consult your distribution’s documentation for information on manually compiling the kernel.
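For those who prefer scripting the change over navigating menuconfig, the Python sketch below edits an existing .config directly. It assumes the usual Kconfig layout for this choice: one `CONFIG_HZ_<value>=y` line, the other choices written as `# CONFIG_HZ_<value> is not set`, and a derived `CONFIG_HZ=<value>` line. `set_config_hz` and `update_config_file` are hypothetical helper names.

```python
import re

# CONFIG_HZ choices offered by the kernel's Kconfig.
HZ_CHOICES = (100, 250, 300, 1000)

# Matches "CONFIG_HZ_250=y" and "# CONFIG_HZ_250 is not set", but not
# unrelated symbols such as CONFIG_HZ_PERIODIC (non-numeric suffix).
CHOICE_RE = re.compile(r"^(?:# )?CONFIG_HZ_(\d+)(?:=y| is not set)$")
VALUE_RE = re.compile(r"^CONFIG_HZ=\d+$")


def set_config_hz(config_text: str, hz: int) -> str:
    """Return a copy of a kernel .config with the timer frequency set to `hz`."""
    if hz not in HZ_CHOICES:
        raise ValueError(f"unsupported HZ {hz}; choose one of {HZ_CHOICES}")
    out, seen_choice, seen_value = [], False, False
    for line in config_text.splitlines():
        m = CHOICE_RE.match(line)
        if m and int(m.group(1)) in HZ_CHOICES:
            n = int(m.group(1))
            # Exactly one choice symbol is enabled; the rest are "is not set".
            out.append(f"CONFIG_HZ_{n}=y" if n == hz else f"# CONFIG_HZ_{n} is not set")
            seen_choice = seen_choice or n == hz
        elif VALUE_RE.match(line):
            # CONFIG_HZ carries the numeric value implied by the chosen symbol.
            out.append(f"CONFIG_HZ={hz}")
            seen_value = True
        else:
            out.append(line)
    if not seen_choice:
        out.append(f"CONFIG_HZ_{hz}=y")
    if not seen_value:
        out.append(f"CONFIG_HZ={hz}")
    return "\n".join(out) + "\n"


def update_config_file(path: str, hz: int) -> None:
    """Rewrite the .config at `path` in place (keep a backup first)."""
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(set_config_hz(text, hz))
```

For example, `update_config_file(".config", 1000)` in the kernel source tree, followed by `make olddefconfig` so Kconfig re-checks the result. The kernel tree’s own scripts/config helper is an alternative that avoids hand-rolled parsing.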

The upstream Linux default is CONFIG_HZ=250, and both Arch Linux’s linux package and Debian’s linux-image-amd64 ship with that value. The author’s Gentoo system, built from sys-kernel/gentoo-sources, appears to have defaulted to 1000Hz. The kernel source offers 100Hz, 250Hz, 300Hz, and 1000Hz.
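To check which value a distribution kernel actually uses, one can read the running kernel’s configuration. A minimal Python sketch, assuming the config is exposed at /proc/config.gz (kernels built with CONFIG_IKCONFIG_PROC) or installed as /boot/config-&lt;release&gt;; neither location is guaranteed on every system, and the helper names are hypothetical.

```python
import gzip
import os
import re
from typing import Optional

# Matches the numeric CONFIG_HZ line, not the CONFIG_HZ_<n>=y choice lines.
HZ_LINE = re.compile(r"^CONFIG_HZ=(\d+)$", re.MULTILINE)


def parse_config_hz(config_text: str) -> Optional[int]:
    """Extract CONFIG_HZ from kernel config text, or None if absent."""
    m = HZ_LINE.search(config_text)
    return int(m.group(1)) if m else None


def read_running_config() -> Optional[str]:
    """Return the running kernel's config text from common locations."""
    candidates = [
        ("/proc/config.gz", True),  # needs CONFIG_IKCONFIG_PROC
        (f"/boot/config-{os.uname().release}", False),  # installed by many distros
    ]
    for path, compressed in candidates:
        try:
            if compressed:
                with gzip.open(path, "rt") as f:
                    return f.read()
            with open(path) as f:
                return f.read()
        except OSError:
            continue  # missing or unreadable; try the next location
    return None


if __name__ == "__main__":
    text = read_running_config()
    if text is None:
        print("kernel config not found in /proc/config.gz or /boot")
    else:
        print(f"CONFIG_HZ={parse_config_hz(text)}")
```

Note that `getconf CLK_TCK` does not answer this question: it reports USER_HZ, the fixed tick unit exposed to user space (100 on x86), regardless of CONFIG_HZ.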

The Benchmark Setup

The author’s machine runs Gentoo Linux ~amd64 with Linux 4.17.10 and consists of:

- an AMD Ryzen 7 2700X (with its stock Wraith Prism cooler),

- 16GB of DDR4-2400 running in dual-channel mode,

- a Gigabyte X470 Aorus Ultra Gaming motherboard,

- a Samsung 850 Evo 500GB drive, and

- an EVGA GeForce GTX 1050 Ti FTW.

No overclocks were applied, and XFR2 remained enabled. The virtualization setup consists of:

- QEMU 2.12.0 (with the AMD SMT, PulseAudio, and CPU Pinning patches applied),

- libvirt 4.5.0,

- a Windows 10 1607 guest (with all updates applied as of late July 2018),

- Windows NVIDIA driver 398.36,

- Windows VirtIO drivers 0.1.141,

- VirtIO SCSI raw image passthrough with cache disabled and iomode=threads,

- 6 of the 8 cores (12 of 16 threads) passed through to the guest with CPU pinning and isolcpus.
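The pinning layout above can also be planned programmatically. The sketch below is a hypothetical helper, not the author’s configuration: it groups host CPUs into physical cores using the sibling lists Linux exposes in /sys/devices/system/cpu/cpuN/topology/thread_siblings_list, leaves the lowest-numbered cores to the host, and maps guest vCPUs onto the remaining cores so that each pair of guest threads lands on one pair of host SMT siblings. The printed pairs correspond to libvirt `<vcpupin>` entries, and the CPU list to the isolcpus= kernel parameter.

```python
import glob
import re
from typing import Dict, List, Tuple


def parse_cpu_list(text: str) -> List[int]:
    """Parse a sysfs CPU list such as "0,8" or "0-1" into CPU numbers."""
    cpus: List[int] = []
    for part in text.strip().split(","):
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def read_sibling_lists() -> Dict[int, str]:
    """Map each host CPU number to its thread_siblings_list text."""
    lists = {}
    for path in glob.glob("/sys/devices/system/cpu/cpu[0-9]*/topology/thread_siblings_list"):
        cpu = int(re.search(r"cpu(\d+)/topology", path).group(1))
        with open(path) as f:
            lists[cpu] = f.read()
    return lists


def plan_pinning(sibling_lists: Dict[int, str], guest_cores: int) -> Tuple[List[Tuple[int, int]], str]:
    """Return ([(guest_vcpu, host_cpu), ...], isolcpus list) for `guest_cores` cores."""
    # Each unique sibling set is one physical core; sort by lowest CPU number.
    cores = sorted({tuple(parse_cpu_list(s)) for s in sibling_lists.values()})
    if not 0 < guest_cores <= len(cores):
        raise ValueError(f"guest_cores must be between 1 and {len(cores)}")
    chosen = cores[-guest_cores:]  # leave the lowest-numbered cores to the host
    pins = list(enumerate(cpu for core in chosen for cpu in core))
    isolated = ",".join(str(c) for c in sorted(cpu for core in chosen for cpu in core))
    return pins, isolated


if __name__ == "__main__":
    try:
        pins, isolated = plan_pinning(read_sibling_lists(), guest_cores=6)
    except ValueError as err:
        print(f"cannot plan 6-core pinning on this host: {err}")
    else:
        for vcpu, cpu in pins:
            print(f'<vcpupin vcpu="{vcpu}" cpuset="{cpu}"/>')
        print(f"isolcpus={isolated}")
```

Reading the sibling lists from sysfs, rather than hard-coding a numbering scheme, matters because the way logical CPUs are numbered relative to physical cores differs between platforms and firmware.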

Results

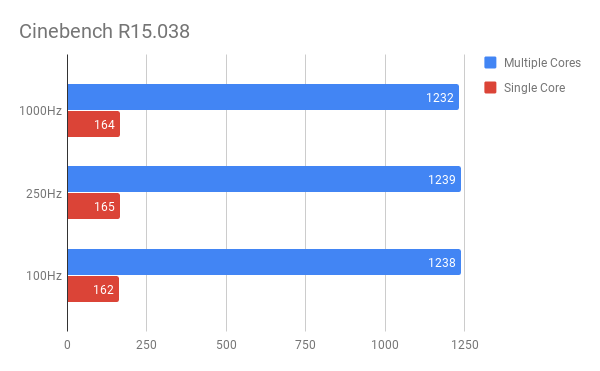

In Cinebench’s CPU benchmark, the differences between kernels fall well within the margin of error. Results show the average of 12 consecutive runs.
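One common way to judge whether two kernels’ results fall within the margin of error is to compare approximate 95% confidence intervals around each mean. The sketch below assumes that approach (the method behind these charts is not stated), and `mean_and_margin` and `within_margin` are hypothetical names. Overlapping intervals are a conservative screen, not a formal significance test.

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_and_margin(samples: Sequence[float], z: float = 1.96) -> Tuple[float, float]:
    """Return (mean, margin), where margin = z * sample stdev / sqrt(n).

    z = 1.96 is the normal-approximation 95% factor; for small n, a
    Student's t value (about 2.2 for 12 runs) is slightly more honest.
    """
    if len(samples) < 2:
        raise ValueError("need at least two runs to estimate spread")
    mean = statistics.mean(samples)
    margin = z * statistics.stdev(samples) / math.sqrt(len(samples))
    return mean, margin


def within_margin(a: Sequence[float], b: Sequence[float]) -> bool:
    """Rough check: do the two confidence intervals overlap?"""
    mean_a, margin_a = mean_and_margin(a)
    mean_b, margin_b = mean_and_margin(b)
    return abs(mean_a - mean_b) <= margin_a + margin_b
```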

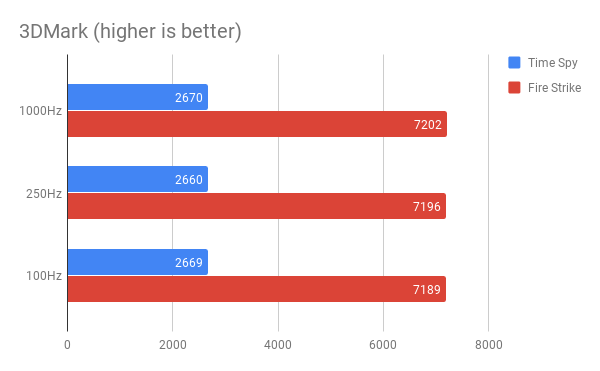

In the more CPU-bound Fire Strike test, kernels with a higher tick rate maintain a slight edge. Time Spy’s results were well within the margin of error.

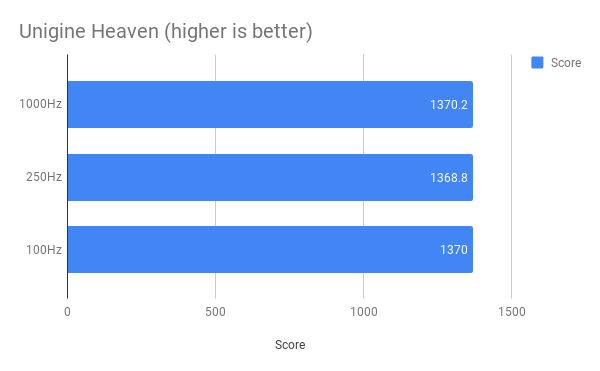

In GPU-bound benchmarks such as Unigine Heaven, CONFIG_HZ makes no difference. Results show the average of 5 consecutive runs. Tests used DirectX 11 at 1080p with high quality, normal tessellation, and 4x anti-aliasing.

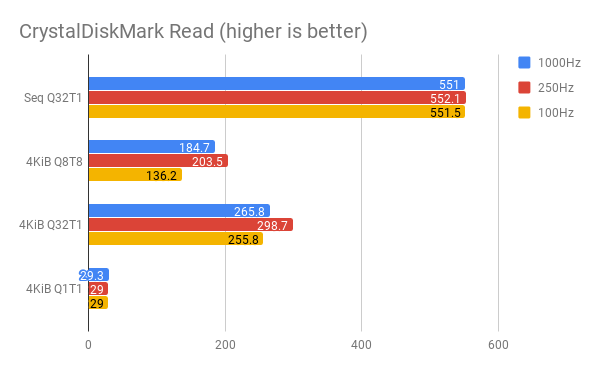

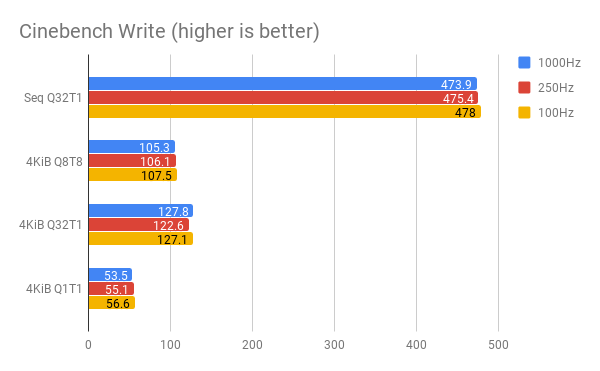

Disk I/O benchmarks depend heavily on the storage driver and, if applicable, the host filesystem. CrystalDiskMark’s results show almost no difference between kernels, except that 250Hz leads in the 4KiB Q8T8 and Q32T1 read tests. Results show the average of 3 runs. The host machine was rebooted between runs so that caches would not influence the results.
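CrystalDiskMark’s Q&lt;x&gt;T&lt;y&gt; labels give the queue depth per thread and the number of threads, so Q8T8 keeps 64 requests in flight while Q32T1 keeps 32. The sketch below shows that arithmetic, a conversion from 4KiB IOPS to throughput (assuming decimal megabytes), and Little’s law for the mean per-request latency implied by a given queue depth and IOPS, assuming the queue stays full at steady state. The helper names are hypothetical.

```python
def outstanding_ios(queue_depth: int, threads: int) -> int:
    """Requests in flight for a CrystalDiskMark "Q<depth>T<threads>" test."""
    return queue_depth * threads


def throughput_mb_s(iops: float, block_bytes: int = 4096) -> float:
    """IOPS at a fixed block size (4KiB by default) to decimal MB/s."""
    return iops * block_bytes / 1_000_000


def mean_latency_ms(in_flight: int, iops: float) -> float:
    """Little's law: mean time per request = requests in flight / completion rate."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return in_flight / iops * 1000.0


if __name__ == "__main__":
    for label, q, t in (("Q8T8", 8, 8), ("Q32T1", 32, 1)):
        print(f"4KiB {label}: {outstanding_ios(q, t)} requests in flight")
```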

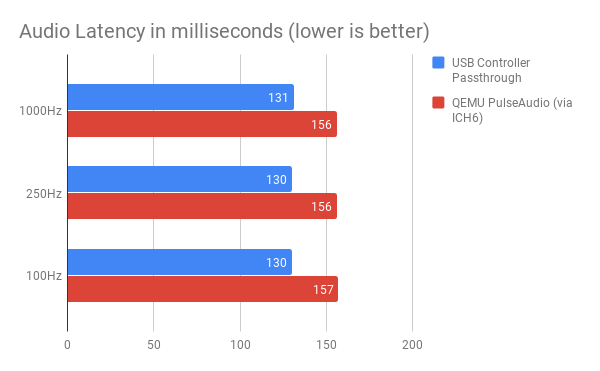

This result was certainly interesting. Using the Audacity latency test guide, round-trip latency from output to input was measured, and the results show no difference between the kernel configurations. QEMU’s PulseAudio environment variables were left at their defaults.
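The measurement reduces to finding how far a test click is shifted between the played track and the recorded track. The Python sketch below does that with a simple amplitude threshold; it is an illustrative stand-in for reading the offset off Audacity’s timeline, not Audacity’s own procedure, and it assumes both tracks are already decoded into normalized sample lists that start at the same instant. The function names are hypothetical.

```python
from typing import Optional, Sequence


def first_onset(samples: Sequence[float], threshold: float) -> Optional[int]:
    """Index of the first sample whose magnitude reaches `threshold`."""
    for i, s in enumerate(samples):
        if abs(s) >= threshold:
            return i
    return None


def round_trip_latency_ms(played: Sequence[float], recorded: Sequence[float],
                          sample_rate: int, threshold: float = 0.5) -> float:
    """Delay between a click in the played track and its echo in the recording.

    Assumes both tracks share a timeline starting at sample 0 and that
    samples are normalized to [-1.0, 1.0].
    """
    start = first_onset(played, threshold)
    echo = first_onset(recorded, threshold)
    if start is None or echo is None:
        raise ValueError("click not found; lower the threshold or raise the gain")
    return (echo - start) * 1000.0 / sample_rate
```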

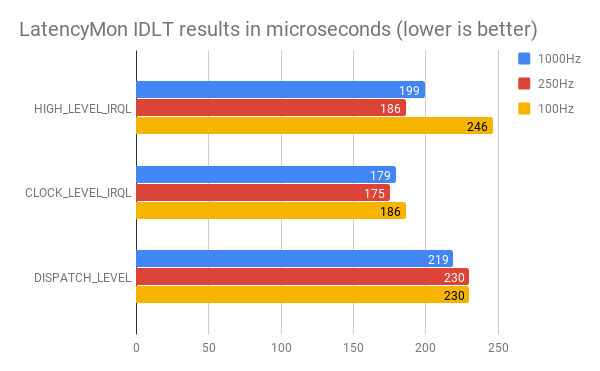

The 100Hz kernel consistently did the worst in LatencyMon’s In-Depth Latency Test suite, although by a small margin. The 250Hz and 1000Hz kernels produced similar results. Each test ran for 5 minutes.

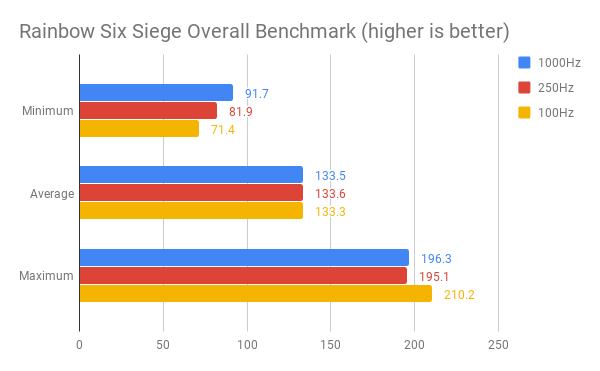

Siege’s results were quite interesting. Overall, 1000Hz yields better minimum framerates, while 100Hz yields better maximum framerates. Average framerates do not differ across CONFIG_HZ settings. The benchmark was run 6 times per CONFIG_HZ setting, and the results were averaged. All settings remained at the lowest preset except for medium texture quality, 4x anisotropic texture filtering, and medium shading quality. The low graphics settings shift load from the GPU to the CPU.
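Because an average framerate should be computed as frames divided by elapsed time, not as the mean of per-frame FPS values, it helps to separate per-run statistics from the averaging across runs. A minimal sketch, assuming per-frame render times in milliseconds are available; the game’s built-in benchmark may compute its minimum and maximum differently (for example over one-second windows), and the helper names are hypothetical.

```python
import statistics
from typing import Dict, Sequence


def run_fps_stats(frame_times_ms: Sequence[float]) -> Dict[str, float]:
    """Min/avg/max framerate for one run from per-frame render times (ms)."""
    if not frame_times_ms or any(t <= 0 for t in frame_times_ms):
        raise ValueError("need at least one positive frame time")
    elapsed_s = sum(frame_times_ms) / 1000.0
    return {
        "min": 1000.0 / max(frame_times_ms),     # slowest single frame
        "avg": len(frame_times_ms) / elapsed_s,  # frames per elapsed second
        "max": 1000.0 / min(frame_times_ms),     # fastest single frame
    }


def average_runs(runs: Sequence[Sequence[float]]) -> Dict[str, float]:
    """Average each statistic across runs (e.g. 6 runs per CONFIG_HZ setting)."""
    per_run = [run_fps_stats(r) for r in runs]
    return {key: statistics.mean(s[key] for s in per_run) for key in ("min", "avg", "max")}
```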

Conclusion

If you’re compiling your kernel from source, it is advisable to pick 1000Hz over 100Hz or 250Hz for a small but tangible improvement in minimum framerates while gaming. On the other hand, for a very I/O-heavy workload, CONFIG_HZ=250 (or even 300) might be a better choice. However, if your distribution ships a precompiled kernel, the benefits likely do not justify maintaining a custom kernel configuration and recompiling it with each upgrade.


Images courtesy of Pixabay