QEMU supports many types of virtualized disks, and throughput, latency, and CPU overhead vary greatly depending on which one you choose. Here’s a rundown of the main options, along with their benefits and drawbacks.

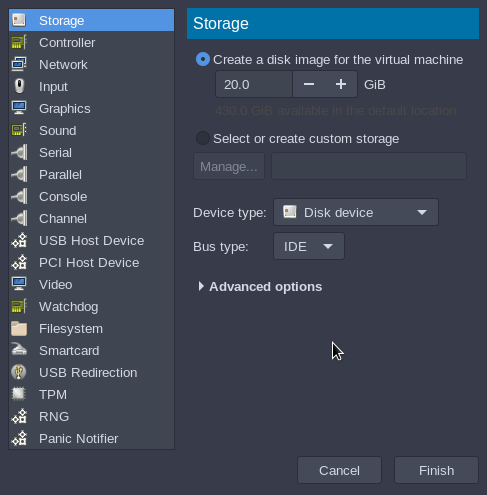

IDE/SATA

The two most commonly used emulated disk controllers in QEMU are IDE and SATA (AHCI). The advantage of emulation is broad guest OS support: the guest sees a generic physical controller and needs no special drivers. The disadvantage is performance. Throughput is generally low, latency is high, and the emulation wastes host CPU time. Of the two, SATA is generally preferable, though older guests, particularly Windows XP (which ships without an AHCI driver), may only recognize IDE controllers.
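On the QEMU command line, the two emulated options differ mainly in which controller the disk is attached to. The helper below is a hypothetical sketch that only assembles arguments; it assumes a raw image named `disk.img` and the `pc` (i440fx) machine type, whose built-in IDE controller exposes buses named `ide.0` and `ide.1`. The device names `ide-hd` and `ich9-ahci` are real QEMU devices.

```python
def emulated_disk_args(image: str, controller: str) -> list[str]:
    """Build QEMU arguments attaching a raw image to an emulated IDE or SATA controller.

    Hypothetical helper for illustration; assumes the 'pc' (i440fx) machine type
    for the IDE case, since its built-in IDE controller provides bus 'ide.0'.
    """
    # Define the backend without attaching it to any bus (if=none),
    # so the -device entries below decide how the guest sees it.
    args = ["-drive", f"file={image},format=raw,if=none,id=disk0"]
    if controller == "ide":
        # Attach the disk to the machine's built-in IDE bus.
        args += ["-device", "ide-hd,drive=disk0,bus=ide.0"]
    elif controller == "sata":
        # Add an AHCI (SATA) controller and attach the disk to its first port.
        args += ["-device", "ich9-ahci,id=ahci",
                 "-device", "ide-hd,drive=disk0,bus=ahci.0"]
    else:
        raise ValueError(f"unknown controller: {controller}")
    return args
```

The returned list can be appended to a `qemu-system-x86_64` invocation, for example with `subprocess.run`.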

USB

USB disks are simple to explain: QEMU emulates a USB mass storage device, and the guest sees it as an ordinary USB drive. This is useful for tasks such as booting from a USB installer “img” file. USB disks are rarely used as permanent storage, because they are still emulated and perform poorly compared to the next option.
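A minimal sketch of the QEMU arguments for an emulated USB stick, assuming a raw image file; the helper name is hypothetical, while `qemu-xhci` and `usb-storage` are real QEMU devices. Setting `bootindex=0` asks the firmware to try this device first, which is what you want when booting an installer image.

```python
def usb_disk_args(image: str) -> list[str]:
    """Build QEMU arguments exposing a raw image as a USB mass storage device.

    Hypothetical helper; 'image' is assumed to be a raw USB installer image.
    """
    return [
        # Backend for the image file, not attached to any bus yet.
        "-drive", f"file={image},format=raw,if=none,id=usbdisk",
        # A USB 3 (xHCI) host controller for the device to plug into.
        "-device", "qemu-xhci,id=xhci",
        # The emulated mass storage device; bootindex=0 makes it the first boot choice.
        "-device", "usb-storage,bus=xhci.0,drive=usbdisk,bootindex=0",
    ]
```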

VirtIO

Of the options so far, VirtIO is by far the best choice for throughput, latency, and CPU overhead. It requires special drivers in the guest: Windows drivers (virtio-win) are available from the Fedora project, and Linux has included VirtIO guest support since kernel 2.6.25. Instead of emulating a physical controller, VirtIO makes the guest aware that it is running in a virtual machine, an approach known as paravirtualization. The guest shares a region of its RAM with the host, organized into queues (virtqueues), and exchanges I/O requests through it. This is much faster than emulation, wastes far less CPU, and lowers I/O latency.
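For comparison with the emulated controllers, a virtio-blk disk needs only a different `-device` entry. This is a hypothetical helper that assumes a raw image; `virtio-blk-pci` is the real QEMU device name, and the guest still needs VirtIO drivers to see the disk.

```python
def virtio_blk_args(image: str) -> list[str]:
    """Build QEMU arguments attaching a raw image as a paravirtualized virtio-blk disk.

    Hypothetical helper; the guest must have VirtIO drivers (built into Linux,
    installed separately on Windows) to detect this disk.
    """
    return [
        "-drive", f"file={image},format=raw,if=none,id=disk0",
        # virtio-blk-pci exposes the disk as a VirtIO PCI device instead of
        # emulating a physical IDE or SATA controller.
        "-device", "virtio-blk-pci,drive=disk0",
    ]
```

QEMU also accepts the shorthand `-drive file=disk.img,format=raw,if=virtio`, which creates the same kind of device in one option.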

VirtIO is not limited to disks. Networking, serial ports, input devices, and random number generation are among the other things it can accelerate.
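Each of the VirtIO device classes mentioned above corresponds to a QEMU device name. The table and `device_arg` helper below are a hypothetical sketch; the device names are real QEMU devices using the PCI transport common on x86 guests, and the rule that network and disk devices must be given a backend is this helper's own simplification.

```python
# QEMU device names (PCI transport) for common VirtIO device classes, paired with
# the backend property each one is wired to (None = usable without one).
VIRTIO_DEVICES = {
    "network": ("virtio-net-pci", "netdev"),
    "disk": ("virtio-blk-pci", "drive"),
    "serial": ("virtio-serial-pci", None),   # ports are added as virtserialport devices
    "input": ("virtio-keyboard-pci", None),  # also virtio-mouse-pci, virtio-tablet-pci
    "rng": ("virtio-rng-pci", None),
}

def device_arg(kind: str, backend_id: str = "") -> str:
    """Return a QEMU -device value for a VirtIO device class (hypothetical helper)."""
    name, backend_prop = VIRTIO_DEVICES[kind]
    if backend_prop is None:
        return name
    # This helper insists on a backend id for network and disk devices.
    if not backend_id:
        raise ValueError(f"{name} needs a {backend_prop}= backend id")
    return f"{name},{backend_prop}={backend_id}"
```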

VirtIO SCSI

The default “virtio” disk bus in libvirt is virtio-blk. A second kind of VirtIO disk exists: virtio-scsi. It is newer and is often recommended over the older virtio-blk. VirtIO SCSI can also pass discard (TRIM/UNMAP) requests from the guest through to the host. These tell the host which blocks are no longer in use, so it can release them from the image file or, if the underlying storage device is a solid-state disk, TRIM them.
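In libvirt domain XML, a virtio-scsi disk needs a `virtio-scsi` controller plus a disk whose target uses `bus='scsi'`; discard pass-through is enabled with `discard='unmap'` on the `<driver>` element. The sketch below builds that fragment with the standard library; the helper name and image path are hypothetical placeholders.

```python
import xml.etree.ElementTree as ET

def virtio_scsi_disk_xml(image: str, target: str = "sda") -> str:
    """Return libvirt <devices> XML for a raw image on a virtio-scsi controller with discard enabled.

    Hypothetical helper; the image path and target name are placeholders.
    """
    devices = ET.Element("devices")
    # The virtio-scsi controller the disk attaches to.
    ET.SubElement(devices, "controller", type="scsi", index="0", model="virtio-scsi")
    disk = ET.SubElement(devices, "disk", type="file", device="disk")
    # discard='unmap' forwards the guest's discard requests to the host.
    ET.SubElement(disk, "driver", name="qemu", type="raw", discard="unmap")
    ET.SubElement(disk, "source", file=image)
    # bus='scsi' places the disk on the SCSI controller instead of virtio-blk.
    ET.SubElement(disk, "target", dev=target, bus="scsi")
    return ET.tostring(devices, encoding="unicode")
```

Inside a Linux guest, the discards are actually issued by running `fstrim` or by mounting the filesystem with the `discard` option.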

Full Block Device vs Image File

Passing a block device through directly can perform slightly better than using an image file, though this depends heavily on the host filesystem the image file lives on. On EXT4, XFS, and ZFS, for example, the overhead is relatively low and performance should be very similar. Image files on BTRFS, however, tend to perform poorly, and FUSE-based filesystems, such as NTFS mounted through ntfs-3g, should be out of the question.
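In libvirt, the only difference between the two approaches is the disk `type` and whether the source is given as `dev=` (block device) or `file=` (image file). The helper below is a hypothetical sketch; `/dev/sdb` and the image path in the usage line are placeholders.

```python
import xml.etree.ElementTree as ET

def disk_xml(path: str, is_block_device: bool, target: str = "vdb") -> str:
    """Return a libvirt <disk> element for either a raw block device or a raw image file.

    Hypothetical helper; 'path' is a placeholder such as /dev/sdb or an image file path.
    """
    disk = ET.Element("disk", type="block" if is_block_device else "file", device="disk")
    ET.SubElement(disk, "driver", name="qemu", type="raw")
    # Block devices use <source dev=...>; image files use <source file=...>.
    source_attr = "dev" if is_block_device else "file"
    ET.SubElement(disk, "source", {source_attr: path})
    # Attach through virtio-blk, the fastest non-passthrough option described above.
    ET.SubElement(disk, "target", dev=target, bus="virtio")
    return ET.tostring(disk, encoding="unicode")
```

For example, `disk_xml("/dev/sdb", True)` produces a whole-disk passthrough entry, while `disk_xml("/var/lib/libvirt/images/data.img", False)` produces the image-file equivalent.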

Direct SATA Controller Passthrough via vfio-pci

On modern PCs, SATA controllers, including onboard ones, sit on the PCI (PCIe) bus. You can install a spare SATA controller, such as a PCIe add-in card, and pass it through to the guest with vfio-pci; every disk connected to it then belongs directly to the guest. This approach adds almost no latency or overhead and delivers near-native throughput. The drawback is that you need a second SATA controller dedicated solely to the VM, and its disks are unavailable to the host while the guest is running.
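Before passing a controller through, it helps to know its PCI address and IOMMU group, because every device in that group must be handed to the guest together. The sketch below reads the standard Linux sysfs layout and matches the PCI class prefix `0x0106` (mass storage, SATA subclass); the function name is hypothetical, and the `sysfs_root` parameter exists only so the scan can be pointed at a test directory.

```python
import os
from typing import Optional

# PCI class code prefix for SATA controllers: class 0x01 (mass storage), subclass 0x06.
SATA_CLASS_PREFIX = "0x0106"

def find_sata_controllers(sysfs_root: str = "/sys") -> dict[str, Optional[str]]:
    """Map each SATA controller's PCI address to its IOMMU group number.

    Hypothetical helper; the group is None when the IOMMU is disabled,
    and an empty dict is returned if the sysfs directory is missing.
    """
    devices_dir = os.path.join(sysfs_root, "bus", "pci", "devices")
    result: dict[str, Optional[str]] = {}
    if not os.path.isdir(devices_dir):
        return result
    for addr in sorted(os.listdir(devices_dir)):
        dev_dir = os.path.join(devices_dir, addr)
        with open(os.path.join(dev_dir, "class")) as f:
            pci_class = f.read().strip()
        if not pci_class.startswith(SATA_CLASS_PREFIX):
            continue
        # iommu_group is a symlink to .../kernel/iommu_groups/<N>;
        # it only exists when the IOMMU is enabled.
        group_link = os.path.join(dev_dir, "iommu_group")
        result[addr] = os.path.basename(os.readlink(group_link)) if os.path.islink(group_link) else None
    return result
```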

Direct NVMe Drive Passthrough via vfio-pci

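As with SATA controller passthrough, passing an NVMe drive through via vfio-pci improves performance, and no extra controller is needed because each NVMe drive is its own PCIe device. The performance gain is even larger thanks to NVMe drives’ very high throughput, and the overhead is far lower than with emulated or VirtIO disks.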
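In libvirt, a PCI device such as an NVMe drive (or the SATA controller from the previous section) is passed through with a `<hostdev>` element. The sketch below converts a sysfs-style address like `0000:04:00.0` into that element; the helper name and the example address are placeholders, and the real address can be found with `lspci -D`.

```python
import xml.etree.ElementTree as ET

def pci_hostdev_xml(pci_address: str) -> str:
    """Return a libvirt <hostdev> element that passes a host PCI device through via VFIO.

    Hypothetical helper; pci_address uses the sysfs form 'DDDD:BB:SS.F'.
    """
    domain, bus, slot_func = pci_address.split(":")
    slot, function = slot_func.split(".")
    # managed='yes' lets libvirt detach the device from its host driver (e.g. nvme),
    # bind it to vfio-pci when the guest starts, and give it back afterwards.
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="yes")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(source, "address", domain=f"0x{domain}", bus=f"0x{bus}",
                  slot=f"0x{slot}", function=f"0x{function}")
    return ET.tostring(hostdev, encoding="unicode")
```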

RAW vs QCow2

The image format also makes quite a difference. RAW images are considerably faster than QCow2 images, which are the default format for libvirt-managed disks on most distributions. QCow2 images support snapshots, but using those snapshots can degrade performance even further. For snapshots with little or no performance loss, you can use the ZFS filesystem’s built-in snapshots, or even store the VM disk on a ZVol (a ZFS block device).
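Two common follow-ups are converting an existing QCow2 image to RAW and setting up a ZVol with a snapshot. The helpers below are a hypothetical sketch that only builds the commands (`qemu-img convert`, `zfs create -V`, and `zfs snapshot` are real commands); the dataset name `tank/vm-disk`, the size, and the snapshot name are placeholders.

```python
def qcow2_to_raw_cmd(src: str, dst: str) -> list[str]:
    """Build a qemu-img command converting a QCow2 image to RAW (hypothetical helper)."""
    # -f names the input format, -O the output format, -p prints progress.
    return ["qemu-img", "convert", "-p", "-f", "qcow2", "-O", "raw", src, dst]

def zvol_cmds(dataset: str, size: str, snapshot: str) -> list[list[str]]:
    """Build ZFS commands that create a zvol for a VM disk and snapshot it (hypothetical helper).

    On Linux the zvol appears as /dev/zvol/<dataset> and can be given to
    the guest like any other block device.
    """
    return [
        ["zfs", "create", "-V", size, dataset],        # -V creates a volume (zvol)
        ["zfs", "snapshot", f"{dataset}@{snapshot}"],  # copy-on-write snapshot
    ]
```

Each command list can be run with `subprocess.run(cmd, check=True)`; convert the image while the VM is shut down.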

Looking to build a machine to use with device assignment? Check out the VFIO Increments page. Looking for support? Check out our Discord.

Images courtesy Pixabay