There’s been a lot of speculation in the press about the bandwidth and connection requirements of Stadia, Google’s upcoming game streaming service.

Criticism usually centers on metered connections and poor infrastructure in rural areas. There’s also been some discussion of the video quality and latency built into systems like Stadia, but surprisingly, no one seems willing or able to go into detail about another key element: the computational cost of encoding.


Any Twitch streamer or video editor can tell you that encoding high bitrate video in real time is a nontrivial challenge, even on current hardware. Of course, a company as large as Google has the resources to make it happen, but the computational load of that process is still significant, and there’s no magic wand that can wave it away.

There’s a lot that can be learned about Stadia by examining the resources required to deliver 1:1 on demand streaming to thousands of clients simultaneously, so we’ve put together some information and qualitative analyses that we think offer a new perspective on how Google’s game streaming service will work.

Understanding Stadia Means Understanding Video Codecs

Before we can talk about Stadia, we need to provide some basic information on video codecs as a foundation for what we’ll be discussing later. If you’re familiar with the nuts and bolts of video encoding you can skip this section, but if not I recommend you at least skim through it.

Video codecs are generally split into two categories, intraframe and interframe, which are sometimes erroneously referred to as “lossless” and “lossy” codecs.

- Intraframe codecs treat each frame as a discrete piece of data, and any compression is done the same way it would be done to a still image, frame by frame. File sizes for these codecs are typically much larger. Intraframe codecs are preferred in video production because of the low computational demand and the higher image quality compared to interframe codecs. These codecs aren’t usually used in streaming because of the large file sizes.

- Interframe codecs compress both the individual images and the temporal data to radically reduce file sizes. They treat frame sequences in large chunks, converting most of the frames into instructions that tell the video player where to shift pixels rather than displaying a new image. This is the type of codec most relevant to streaming and online media.

In addition to these distinctions, there are other factors to consider, like bitrate, I-frame intervals, and “efficiency,” all of which are relevant to the discussion:

- Bitrate – Usually measured in kbit/s, this is a metric that characterises how much data each second of video takes to store. Because it’s measured per second, quality will vary at the same bitrate at different framerates, e.g. 5000 kbit/s at 30p has twice as much data to spend on each frame as 5000 kbit/s at 60p, and will generally look better for it.

- I-Frame Interval – In interframe codecs, I-Frames are “checkpoints” between the vector data frames that act as a reference, used to warp the P/B-frames before hitting the next I-Frame. The fewer of these you encode, the smaller your file sizes, but the more likely your video stream is to have blocking artefacts, also called “macroblocking” or “datamoshing.” These artefacts usually make the video stream unintelligible until you hit the next I-Frame.

- Codec “efficiency” – This is a marketing term, used to indicate that a codec produces smaller files at the cost of a higher computational load in encoding and playback. The codec isn’t more efficient overall, it’s just trading one requirement (file/stream size) for another (computational overhead).
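
The bitrate point in the list above comes down to simple division. As a minimal sketch (the function name is hypothetical, and the figures are the 5000 kbit/s example from the list), the average data budget per frame can be worked out like this:

```python
def bits_per_frame(bitrate_kbit_s: float, fps: float) -> float:
    """Average number of bits available to each frame at a given bitrate."""
    return bitrate_kbit_s * 1000 / fps


if __name__ == "__main__":
    for fps in (30, 60):
        # Same 5000 kbit/s stream, different frame rates
        print(f"{fps}p: {bits_per_frame(5000, fps):,.0f} bits per frame")
```

Halving the frame rate doubles the budget for each frame, although perceived quality does not scale linearly with that budget.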

Not All Codecs Are Created Equal

Another important thing to understand is that every codec performs differently. The most common modern codecs used for streaming, H.264, H.265, VP9, and AV1, all have different trade-offs and places in the technological landscape:

- H.264 – It’s been around for a long time, it encodes and decodes well even on anemic hardware, and it has a decent balance between file size and image quality. Every other codec on this list is a bid to supplant it as the most popular codec, with varying degrees of success.

- H.265 – The successor to H.264, at least tentatively. Most encoder implementations offer around a 50% reduction in file size for the same visual quality, but are about 400-600% slower than H.264. Hardware acceleration helps with this somewhat, but most hardware accelerated solutions limit video bit rate for low latency applications.

- VP9 – About as space efficient as H.265, but most encoders are even slower. Some hardware accelerated encoding is commonly available (Intel).

- AV1 – Technically the most space efficient codec on the list, but every AV1 encoder currently available is hundreds of times slower than H.265 or VP9. No hardware acceleration available. Not considered viable for any live streaming or latency sensitive use case.

With this in mind, Google is probably using a mix of H.265 and H.264 for Stadia to cover the widest range of hardware. There’s not really any efficient way of doing realtime encoding for the other two, which are much better suited to on-demand video services like YouTube.

Testing Methodology

While the exact specs of whatever edge appliances Google will be using to deliver Stadia aren’t available, we do know they’ll be using AMD’s GPUs and semi-custom silicon to build out their infrastructure. These tests are synthetic and should not be taken as a 1:1 evaluation of Stadia’s encoding performance.

Nvidia’s hardware encoding leads other manufacturers by a significant margin in our testing. NVENC beats both QuickSync-based encoding on Intel CPUs and AMD’s UVD/VCE acceleration in terms of maximum supported bitrate at 60p on the zero-latency preset, for both H.264 and H.265. We only had a Fury on hand, but for the purposes of these benchmarks, we’re assuming that the newer AMD cards have caught up somewhat more than testing would indicate.

Testing was done on:

- A 6700K @4.3GHz

- A pair of Samsung 860 Evo SATA SSDs (one source, one output)

- An EVGA GTX 1070

- A Sapphire Fury Nitro +

- CentOS 7.6 with latest drivers.

We used both software and QuickSync for CPU testing, and NVENC for the Nvidia GPU. We attempted VAAPI for AMD encoding but could not complete testing, as VAAPI offered no H.265 encoding support on our setup. CUVID is deprecated and wasn’t tested.

Source footage is 8-bit 1080p60 4:2:2 YUV frames from a BMD Intensity 4K, as well as 2160p60 footage captured on a Magewell Capture HDMI 4K Plus LT (scaled down and looped through to the Intensity).

Transcoding tests were done with ffmpeg, but we validated using Blackmagic’s encoders in Resolve for the GPU tests to make sure the results were not significantly affected by outside factors. The GTX 1070 performed slightly worse compared to the ffmpeg results when encoding H.265 in Resolve. Otherwise results were fairly consistent.

We used a range of games, with and without lots of particle effects, and a range of visual styles to get a cross section of what the average demand on an encoder might be. Results are averaged across titles unless otherwise stated. Tested games include:

- Punch Planet (restricted palette, fast pace)

- Broforce (restricted palette, particle heavy)

- Dying Light (photorealistic, fast pace)

- Everspace (desaturated palette, particle heavy)

Quantitative Testing

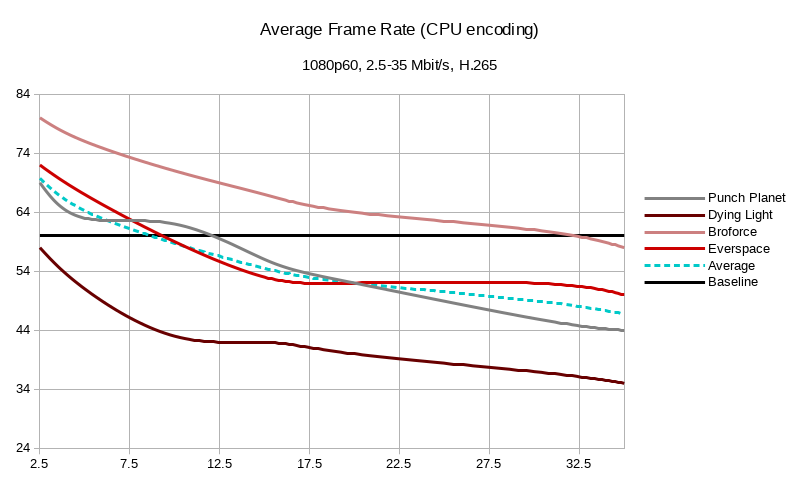

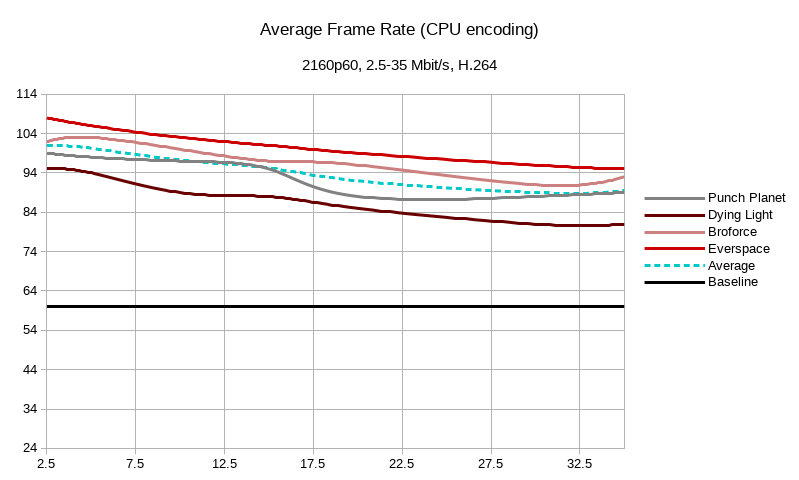

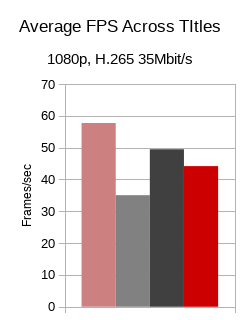

We started by charting average encoding frame rates across a variety of acceleration methods, from 2.5 Mbit/s to the Stadia recommended maximum bandwidth of 35 Mbit/s:

1080p still performs fairly well on CPU HEVC encoding.

4k needs H.264 for real-time encoding on all tested hardware.

ffmpeg command for each batch was as follows:

    ffmpeg -hwaccel vdpau -i $FOOTAGE.mov -c:v libx264 -b:v $BITRATE -preset ultrafast -tune zerolatency "$FOOTAGE/$BITRATE.mov"

Where $FOOTAGE is an array of source files and $BITRATE is the target bitrate in Mbit/s.

Settings were changed according to each acceleration method as follows:

    # NVENC
    ffmpeg -i $FOOTAGE.mov -c:v h264_nvenc -rc:v cbr -b:v $BITRATE -preset llhp -zerolatency true "$FOOTAGE/$BITRATE.mov"
    # QuickSync
    ffmpeg -i $FOOTAGE.mov -c:v h264_qsv -b:v $BITRATE -preset ultrafast -tune zerolatency "$FOOTAGE/$BITRATE.mov"
    # AMD VAAPI
    ffmpeg -vaapi_device /dev/dri/renderD128 -i $FOOTAGE.mov -vf 'format=nv12,hwupload' -c:v h264_vaapi -b:v $BITRATE -preset ultrafast -tune zerolatency "$FOOTAGE/$BITRATE.mov"

H.265 is largely the same with -c:v and other flags changed to reflect the differences in syntax. Some settings will change with ffmpeg version and hardware so be sure to check the documentation if you want to validate these at home.
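
For readers who want to reproduce a batch, here is a rough sketch of how the software-encoding command above could be generated for several clips and bitrates. It only assembles argument lists from the flags quoted in this article and does not run anything. The clip name, the bitrate values, the `M` suffix on the bitrate, and the helper names are placeholders chosen for illustration, not the script used for these tests.

```python
from typing import List


def x264_command(footage: str, mbit: float) -> List[str]:
    """Build the libx264 argument list for one clip at one bitrate."""
    bitrate = f"{mbit:g}M"  # assumption: bitrate passed with an SI suffix, e.g. "35M"
    return [
        "ffmpeg", "-hwaccel", "vdpau",
        "-i", f"{footage}.mov",
        "-c:v", "libx264",
        "-b:v", bitrate,
        "-preset", "ultrafast",
        "-tune", "zerolatency",
        f"{footage}/{bitrate}.mov",
    ]


def batch(footage_names, mbit_values) -> List[List[str]]:
    """One command per (clip, bitrate) pair."""
    return [x264_command(f, b) for f in footage_names for b in mbit_values]


if __name__ == "__main__":
    # Hypothetical clip name and the two endpoints of the tested bitrate range
    for cmd in batch(["everspace"], [2.5, 35]):
        print(" ".join(cmd))
```

Each list could be handed to `subprocess.run` as-is; swapping the encoder flags for the hardware variants would follow the same pattern.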

Measuring Frame Times

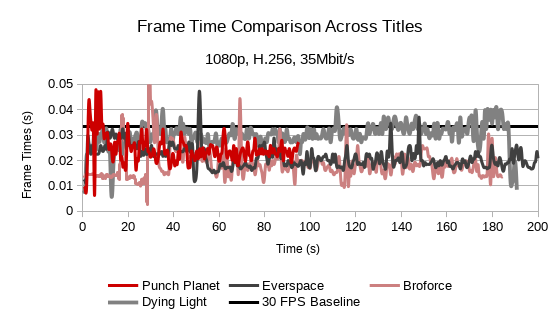

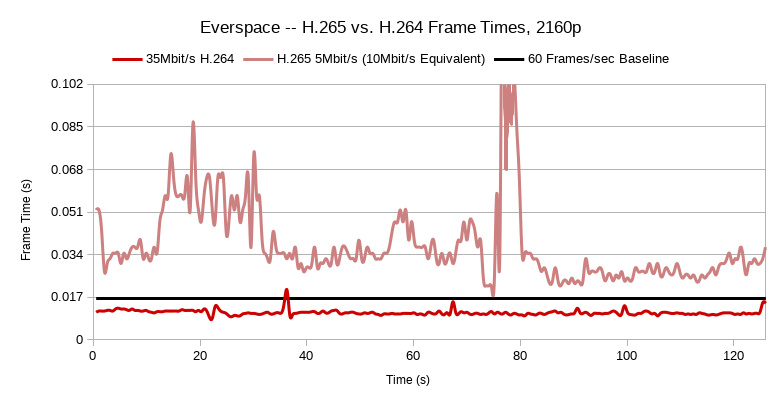

Some of the charts in this article are measured in frame times, or the amount of time it takes the encoder to produce each frame. Encoder frame times must be consistently under 0.0167 seconds (1/60th of a second or 16.7ms) in order to deliver a decent experience to the user, regardless of how high the GPU frame rates are on Stadia’s remote machines. This metric may be more difficult to understand, but it offers more relevant information than average FPS does, because it shows how “smooth” the experience will be.

As you can see in the charts above, average frame rate can be misleading, because most interframe encoders tend to fluctuate depending on what’s happening on-screen. Every average frame rate clears 30fps. However, only one of the four titles tested (Everspace) would be playable at 30fps when encoding HEVC at 35 Mbit/s, Google’s highest advertised connection requirement.
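
To illustrate why an average can hide a bad experience, here is a small sketch using made-up frame times (not data from these tests). It compares the average encoder frame rate with the number of frames that miss the 1/60 s budget; the function names are hypothetical.

```python
FRAME_BUDGET_60 = 1 / 60  # seconds available per frame at 60 fps


def average_fps(frame_times):
    """Average frames per second over a list of per-frame encode times (seconds)."""
    return len(frame_times) / sum(frame_times)


def missed_frames(frame_times, budget=FRAME_BUDGET_60):
    """Count the frames whose encode time exceeded the budget."""
    return sum(1 for t in frame_times if t > budget)


# Hypothetical trace: 90 quick frames at 10 ms, then 10 slow frames at 50 ms
sample = [0.010] * 90 + [0.050] * 10

if __name__ == "__main__":
    print(f"average: {average_fps(sample):.1f} fps")
    print(f"frames over budget: {missed_frames(sample)} of {len(sample)}")
```

This invented trace averages well above 60 fps, yet one frame in ten arrives late, which is the kind of stutter an average alone would never reveal.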

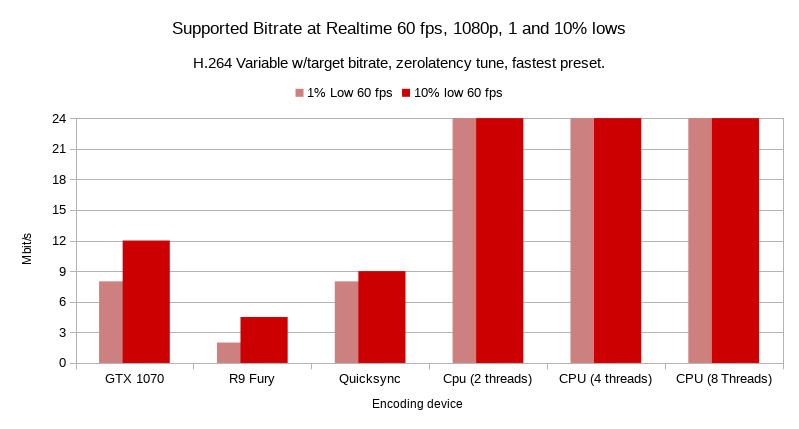

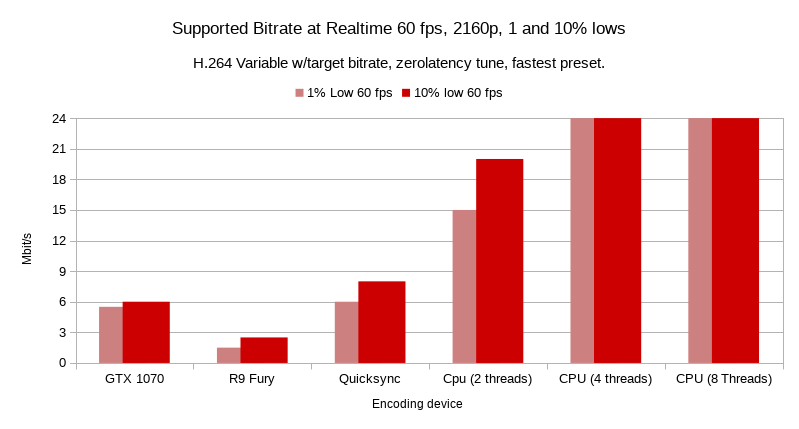

In order to test what bitrates on each encoder are suitable for livestreaming at low latencies, we’ve restricted the criteria to encoding passes that only fall below 60fps when looking at the bottom 10% of reported data. 1% lows are there to demonstrate the maximum bitrate that would offer a “perfect” experience. 500 kbit/s bitrate increments were used when fine tuning these metrics.
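
Percentile lows can be computed in more than one way. The sketch below assumes one common definition, the average frame rate over the slowest N% of frames, and runs it on invented frame times; the function names and the sample data are illustrative only.

```python
def low_fps(frame_times, percent):
    """Average FPS over the slowest `percent` of frames (times in seconds)."""
    slowest_first = sorted(frame_times, reverse=True)
    # Always keep at least one frame so tiny samples still produce a value
    count = max(1, len(slowest_first) * percent // 100)
    worst = slowest_first[:count]
    return len(worst) / sum(worst)


def holds_60fps(frame_times, percent):
    """True if the slowest `percent` of frames still averages 60 fps or better."""
    return low_fps(frame_times, percent) >= 60


# Hypothetical trace: 95 frames at 12 ms, 4 at 20 ms, 1 at 40 ms
sample = [0.012] * 95 + [0.020] * 4 + [0.040]

if __name__ == "__main__":
    for percent in (10, 1):
        print(f"{percent}% low: {low_fps(sample, percent):.1f} fps")
```

Under this definition the sample trace misses 60fps on both its 10% and 1% lows, even though 95 of its 100 frames are comfortably inside the budget.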

This chart really shows the versatility of H.264. If you can give it a few CPU threads, it’ll blow past most hardware implementations in quality on the zero latency preset. The libx264 encoder topped out our bitrate testing, delivering better than realtime at 250Mbit/s on only 2 threads. This isn’t necessarily useful as a metric for Stadia though, as presumably the CPU will be busy running the game being streamed.

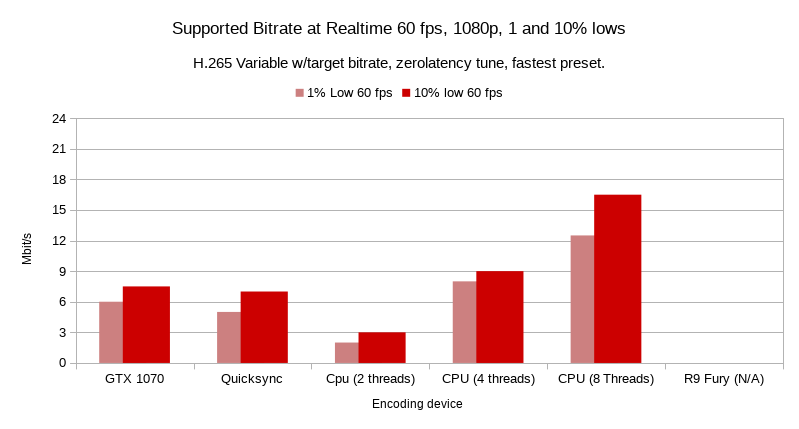

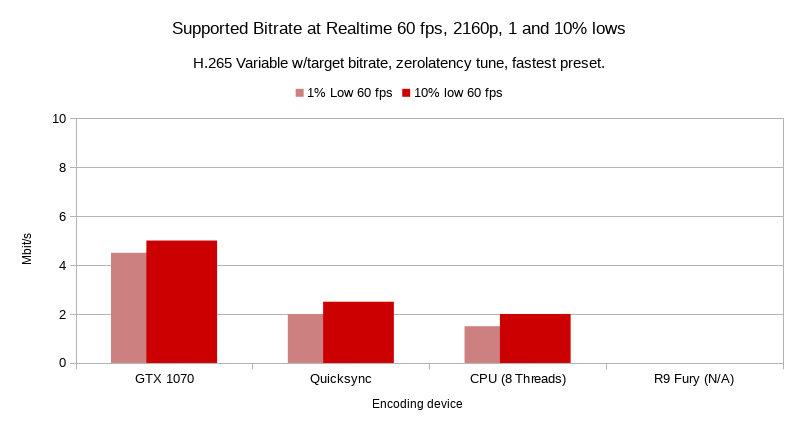

Here, things become a bit more clear cut. H.265 is a much more demanding codec, and the quality improvements aren’t realized to their full potential on modern encoding hardware, because the ability to stream 60p realtime is lessened at higher bitrates.

Even when we move up to 4k, software encoding still seems to be faster for H.264. Even if newer encoders in the Vega and RTX cards made incremental improvements, there’s a hard limit here. This is important because relying on CPU, CUDA or OpenCL encoding will cut significantly into game performance. This time, 4-thread CPU encoding topped out at 80Mbit/s, despite 8-thread encoding still topping 250.

The limitations expressed in the 1080p tests are reflected here. You lose a lot of the built-in efficiency advantage that H.265 provides due to compute overhead. Nvidia seems to retain a surprisingly high bitrate at realtime despite the increased load, where other encoders start to fall apart. 2 and 4 thread CPU encoding were omitted because neither could sustain 60fps at a reasonable bitrate (above 1Mbit/s).

Unless Google builds out Stadia’s edge devices with custom H.265 encoder ASICs, 4K will most likely all be streamed in H.264:

Everspace, the least demanding title we tested in terms of consistent frame times, does not reach bitrate parity on any of the hardware tested for H.265 in 4k. If industry leading hardware encoders can’t manage 15Mbit/s 2160p60, it tracks that Google would need 30-35Mbit/s bandwidth to stream decent H.264 4k to Stadia subscribers.

Qualitative Analysis

Charts can only really tell you so much about an interactive experience. If 35Mbit/s video looks terrible in 4k, it changes the context of everything we’ve presented so far. So, let’s look at our games under test up close to see how each type of compression affects the stream.

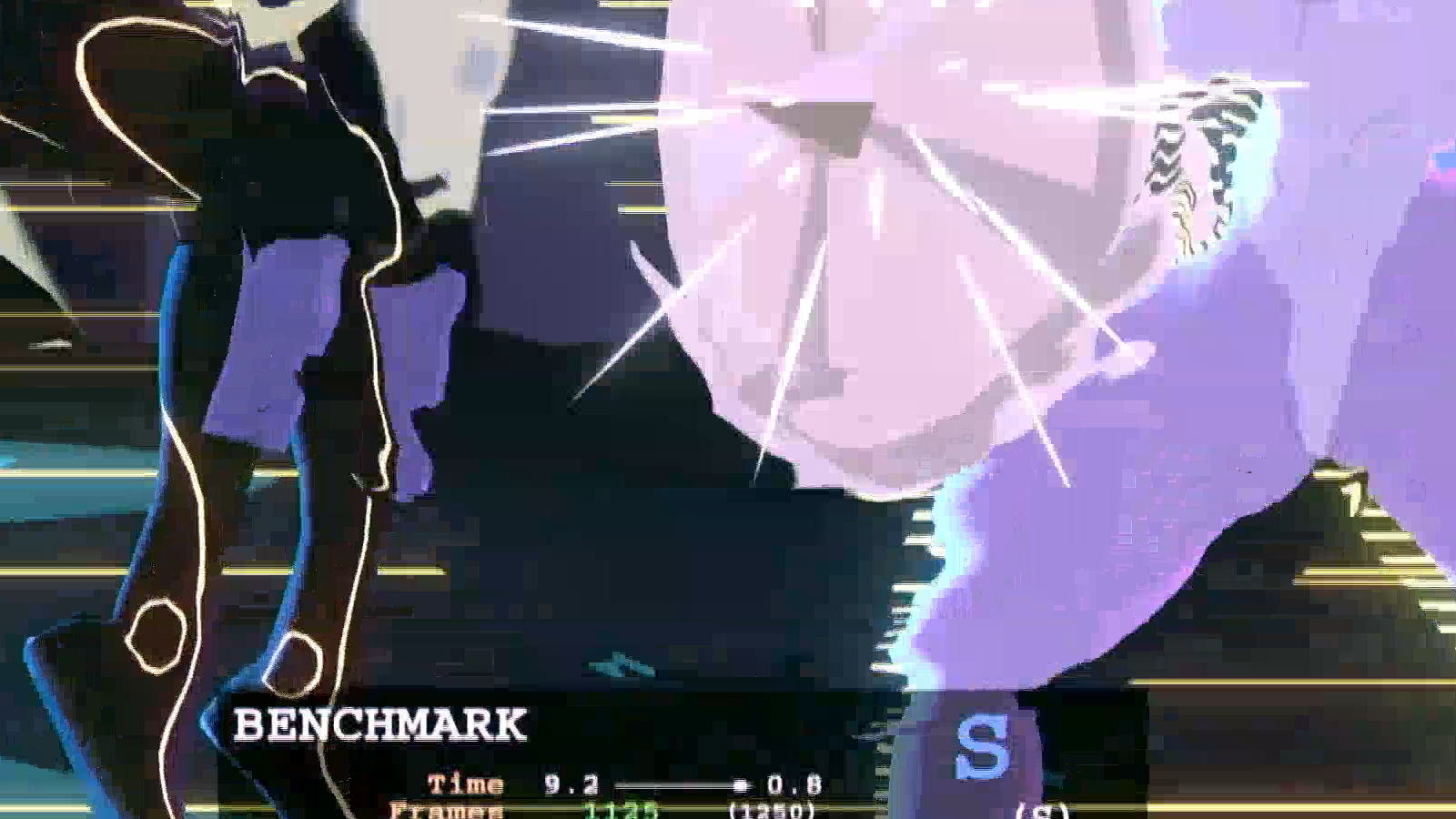

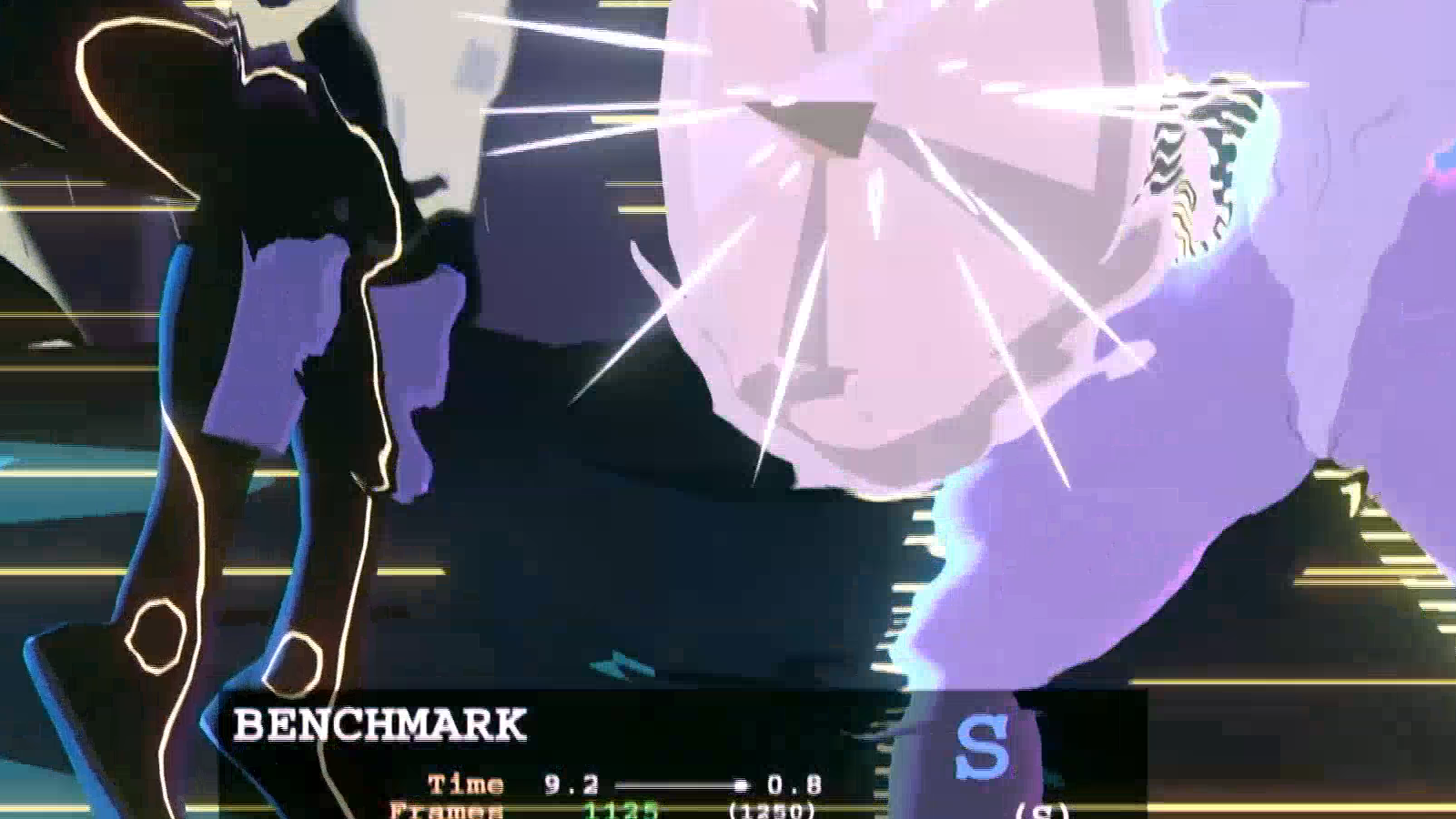

Punch Planet, a game that I thought would not be demanding to encode due to its limited palette, lack of extensive particle effects and simplified aesthetic, ended up being the second slowest title on average after Dying Light. Not only that, but the results seem to be worse quality than other titles when encoding with the same settings.

If we crop in 1:1 from 4k, you can really see each codec falling apart at lower bitrates:

The bottom line here is that Stadia will, at a bare minimum, need to saturate a 20-35 Mbit/s connection to deliver tolerable 4k60 to the consumer. The encoding overhead is so large on every high efficiency codec that most encoders cannot reach bitrates to match H.264 in real time. Slower connections probably won’t be supported until they do.
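
For a sense of what saturating that kind of connection means on a metered plan, the conversion from stream bitrate to data used per hour is straightforward. This sketch assumes a constant bitrate and decimal gigabytes (1 GB = 8000 Mbit); the function name is hypothetical.

```python
def gb_per_hour(mbit_s: float) -> float:
    """Data transferred in one hour at a constant bitrate, in decimal gigabytes."""
    megabits = mbit_s * 3600    # one hour of streaming
    return megabits / 8 / 1000  # Mbit -> MB -> GB


if __name__ == "__main__":
    for rate in (20, 35):
        print(f"{rate} Mbit/s: {gb_per_hour(rate):.2f} GB per hour")
```

At 20 to 35 Mbit/s that works out to roughly 9 to 16 GB for every hour of play.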

1080p is where H.265 becomes a bit more interesting. Given the right combination of client hardware and controlled rollout, H.265 (or another high-efficiency codec) will probably be key to their proposed mobile support.

If Nvidia’s last gen hardware encoder can do 5Mbit/s H.265 on a consumer card, then whatever Google’s built out with AMD can probably match that, or come close. If we look at our most demanding test title, Dying Light, we see that acceptable quality can be achieved for 1080p streams on very low bitrate H.265:

2.5 Mbit/s H.265 and 5 Mbit/s can be hard to tell apart on a small screen, and because modern phones ship with good hardware decoders, there’s a good chance that in a few years the Pixel flagship devices won’t be the only phones to support Stadia.

If we do a 1:1 crop, you can see that the quality of the H.265 is appreciably better, despite coming from a video stream that takes half the bandwidth:

The quality differences become less noticeable at higher bitrates, but for devices with small screens this is probably how Google plans to deliver. In our testing, we found that hardware encoders seem to top out between 5 and 7 Mbit/s for H.265 1080p, which leaves a lot of room at 10-15 Mbit/s and above for improvement with older codecs on landline connections or on devices connected to a TV or monitor.

Something stills don’t show is how each of these codecs treats motion, so here’s a comparison of one of the less demanding titles tested, Everspace:

YouTube compression will affect results. Watch fullscreen at your native resolution for the most accurate comparison. Uncompressed version available to patrons.

To demonstrate the effect of inconsistent frame times, we’ve put together a short video with both the original output from the least demanding game tested and a 2 core render streamed to OBS at a reasonable bitrate:

Stutters or “dropped frames” happen when the encoder can’t keep up with the refresh rate of the client’s device. If you push the bitrate or resolution too high, the game will become unplayable regardless of the game’s frame rate or frame time consistency.

Conclusions

There are a few key facts we’ve learned from this research:

- Most hardware encoders have a hard upper limit at low latency, but scale better than software encoding at higher resolutions in HEVC.

- High efficiency codecs aren’t suitable for realtime 4k.

- Typically you only get 1 good stream per encoding ASIC per CPU/GPU.

- Stadia will probably run multiple clients per GPU.

- Consistent frame times are hard to achieve without lots of cores, or low bitrates.

- Encoder frame times are just as important to delivering a good experience as frame rate on the server’s GPU.

As abusive as Stadia will be to metered connections, encoder overhead might pose a problem even for average users. Because 4k will probably only be streamed using CPU H.264 encoding, each additional client using one of the Stadia edge devices will degrade every client’s streaming experience. 3-4 threads to encode per client on top of the ~8 that will be dedicated to running games means up to 50% overhead.
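
The overhead figure is simple arithmetic. As a quick sketch, using the thread counts estimated above (which are estimates, not published Stadia specifications) and a hypothetical helper name:

```python
def encode_overhead(encode_threads: int, game_threads: int = 8) -> float:
    """Encoding threads as a fraction of the threads reserved for the game."""
    return encode_threads / game_threads


if __name__ == "__main__":
    for threads in (3, 4):
        print(f"{threads} encode threads: {encode_overhead(threads):.1%} overhead")
```

Three threads per client comes to 37.5% on top of the game’s share, and four threads reaches the 50% ceiling.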

It’s possible that Google got some sort of custom encoding silicon from AMD during the Stadia development phase, but that won’t completely overcome the overhead for 4k streaming. The moment a Stadia device gets oversubscribed, 4k might quietly drop to 1080p on clients to keep things running.

H.265 streaming at higher resolutions is impractical, but delivering Stadia to mobile devices is probably going to work, given the shift in performance on low bitrate H.265 and the availability of mobile devices with hardware decoders.

Stadia won’t work for anyone that isn’t near a major datacenter with a high bandwidth home connection, but it will “work.” Scaling the video encoding up will probably be the biggest roadblock to increasing adoption, because internet infrastructure is stagnating in a lot of markets and the only way to bring the service to more people will be to reduce the bandwidth needed to stream live video at a tolerable quality.

At the prices and bandwidth requirements quoted by Google for 4k streaming, I don’t see subscriptions covering the infrastructure costs of the service. Bringing it to more people quickly with better encoding hardware will probably be the largest factor in keeping Stadia alive. Google isn’t afraid of killing off its services after just a few years, though, so maybe Stadia will be the next Wave, Allo, Picasa, or Google Plus.

Consider supporting us on Patreon if you like our work, and if you need help or have questions about any of our articles, you can find us on our Discord. We provide RSS feeds as well as regular updates on Twitter if you want to be the first to know about the next part in this series or other projects we’re working on.